A newly released tool lets artists make subtle changes to the pixels in their artwork before uploading it online. If the work is later scraped into an AI training dataset without permission, those changes can break the resulting model in chaotic and unpredictable ways.

Introducing NightShade

NightShade is pitched as a tool for artistic empowerment: a countermeasure against AI companies that train their models on artists’ work, often without the creators’ consent. Poisoning this training data could seriously damage future versions of image-generating AI models such as DALL-E, Stable Diffusion, and Midjourney.

The poisoned data can cause confusing transformations in a model’s outputs, so that a dog turns into a cat, a car into a cow, and so forth. MIT Technology Review was given an exclusive preview of the research, which is to be submitted for peer review at the Usenix computer security conference.

Ben Zhao, a professor at the University of Chicago who helped develop NightShade, hopes the tool will tip the balance of power away from AI companies and back in artists’ favour. If it works as intended, NightShade could become an effective deterrent against AI companies that disregard artists’ copyright and intellectual property.

Disrupting AI Models Through Data Poisoning

AI giants including OpenAI, Meta, Google, and Stability AI face legal battles in which artists claim that their copyrighted works and personal information were harvested without consent or compensation. Meta, Google, Stability AI, and OpenAI did not respond when MIT Technology Review asked them for comment.

Glaze and NightShade: Shielding Artistic Style from AI Scrutiny

Zhao’s team has also developed Glaze, a tool that lets artists “mask” their distinctive style to keep AI companies from copying it. Glaze works in a similar way to NightShade: it subtly alters an image’s pixels in ways that are invisible to the human eye but mislead machine-learning models. The team plans to integrate NightShade into Glaze, giving artists the choice of whether to apply the data-poisoning tool.

The team is also making NightShade open source, so others can tinker with it and build their own versions. The tool becomes more potent the more widely it is adopted: large AI models are trained on datasets of billions of images, so the more poisoned images are scraped into a dataset, the more damage they can do.

NightShade’s Targeted Approach: Exploiting AI Model Vulnerabilities

NightShade exploits an inherent vulnerability in generative AI models: they depend on vast training datasets scraped primarily from the internet, and NightShade tampers with those images. Artists who want to share their work online without having it exploited by AI companies can first run it through Glaze and choose to mask their distinctive style, making it harder for AI models to learn.

Artists can also choose to apply NightShade. When AI developers scrape the internet for additional data to refine an existing model or build a new one, these poisoned samples end up in the model’s dataset and cause it to malfunction. Removing the poisoned data is difficult, because tech companies must painstakingly find and delete each corrupted sample.

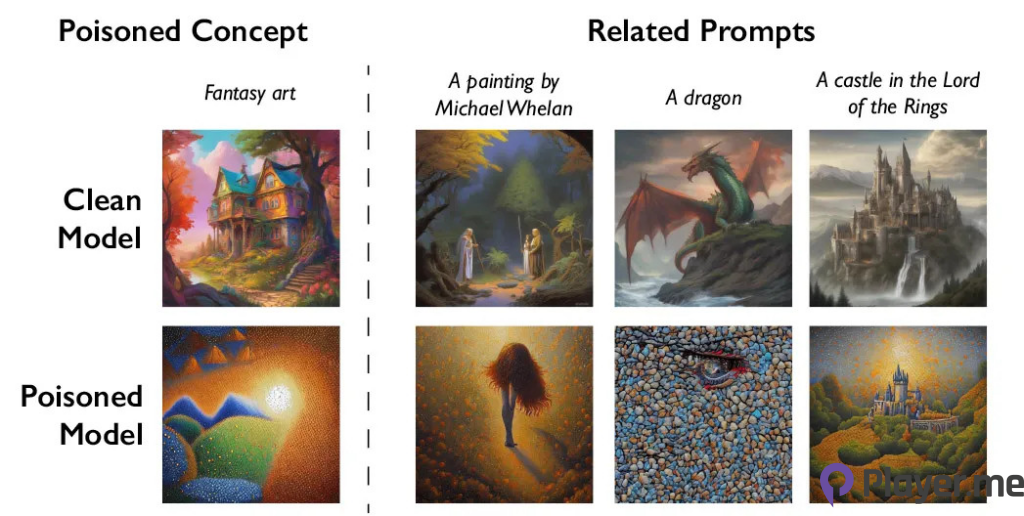

The researchers tested the attack on Stable Diffusion’s latest models and on an AI model they trained themselves. After Stable Diffusion was fed just 50 poisoned images of dogs, its outputs became bizarre, including creatures with too many limbs and cartoonish faces. With 300 poisoned samples, the manipulated model generated cats when asked for dogs.

Addressing Misuse and Building Defences

Generative AI models excel at forming associations between words, which helps the poison spread. NightShade corrupts not only the prompt “dog” but also related concepts such as “puppy”, “husky”, and “wolf”. The attack also reaches tangentially related images: for instance, if a model scraped a poisoned image for the prompt “fantasy art”, related prompts such as “dragon” would be distorted as well.

Zhao acknowledges that the data-poisoning technique could be misused for malicious purposes. However, he argues that attackers would need thousands of poisoned samples to do real damage to larger, more robust models, which are trained on billions of data samples. Vitaly Shmatikov, a Cornell University professor who specialises in AI model security, urges researchers to start developing defences against these attacks.

A Robust Deterrent

Gautam Kamath, an assistant professor at the University of Waterloo who specialises in data privacy and the robustness of AI models, calls the work “fantastic”. He notes that the research shows these vulnerabilities do not disappear in newer AI models. Instead, they become more serious as the models grow more powerful and people place more trust in them, so the stakes only rise over time.

Junfeng Yang, a computer science professor at Columbia University, says NightShade could have a major impact. It could push AI companies to show more respect for artists’ rights, which in turn could lead to fairer compensation for artists.

Empowering Artists’ Control Over Their Work

AI companies such as Stability AI and OpenAI have offered artists the option to opt out of having their images used to train future models. However, artists argue that this is not enough. Artists such as Eva Toorenent hope that NightShade will change the landscape by making AI companies think twice before using artists’ work without consent.

Tools like Glaze and NightShade have given artists such as Autumn Beverly the confidence to share their work online again. Beverly had removed her work from the internet after discovering that it had been scraped without her authorisation into the popular LAION image database. She believes these tools can give artists back control over their own creations.

Together, Glaze and NightShade offer artists new hope of protecting their work and styles from AI companies. Because NightShade is open source, other people can use and modify it to protect their own art. Now that these weaknesses in generative AI models have been made public, artists have concrete steps they can take to safeguard their creative work.
