As a prominent player in GPU and AI development, NVIDIA consistently stays at the forefront of chip design, competing with well-established companies like AMD and Intel. Their continued push in the AI chip race underscores their commitment to innovation, and their recent unveiling of the NVIDIA HGX H200 for generative AI servers further reinforces their dominance in the field.

Introducing the NVIDIA HGX H200

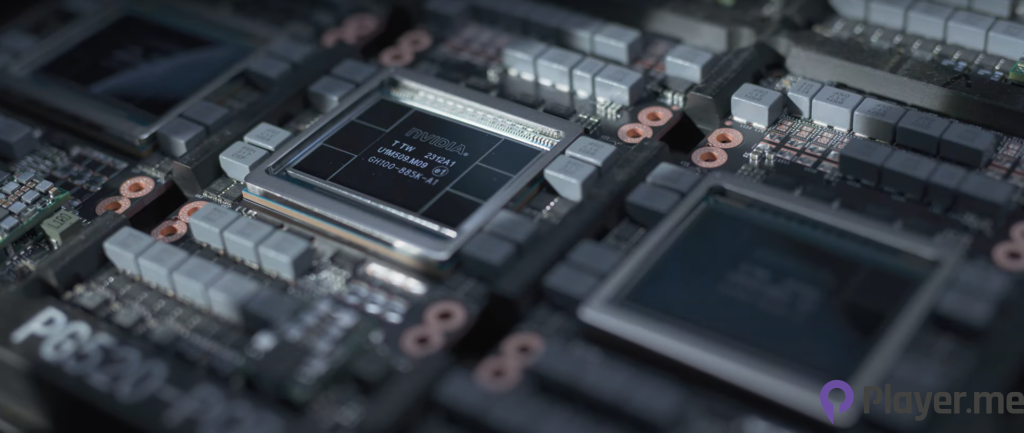

NVIDIA’s new top-of-the-line chip for AI work, the NVIDIA HGX H200, was announced on Monday, 13 November 2023. It is a GPU designed specifically for training and deploying the AI models behind the surge in generative AI capabilities.

The HGX H200 is based on the H200 Tensor Core GPU, which has enhanced memory capacity for handling the large data loads of generative AI and high-performance computing (HPC) workloads. Like the H100, it is built on NVIDIA’s Hopper architecture.

NVIDIA HGX H200 Is Better Than the HGX H100

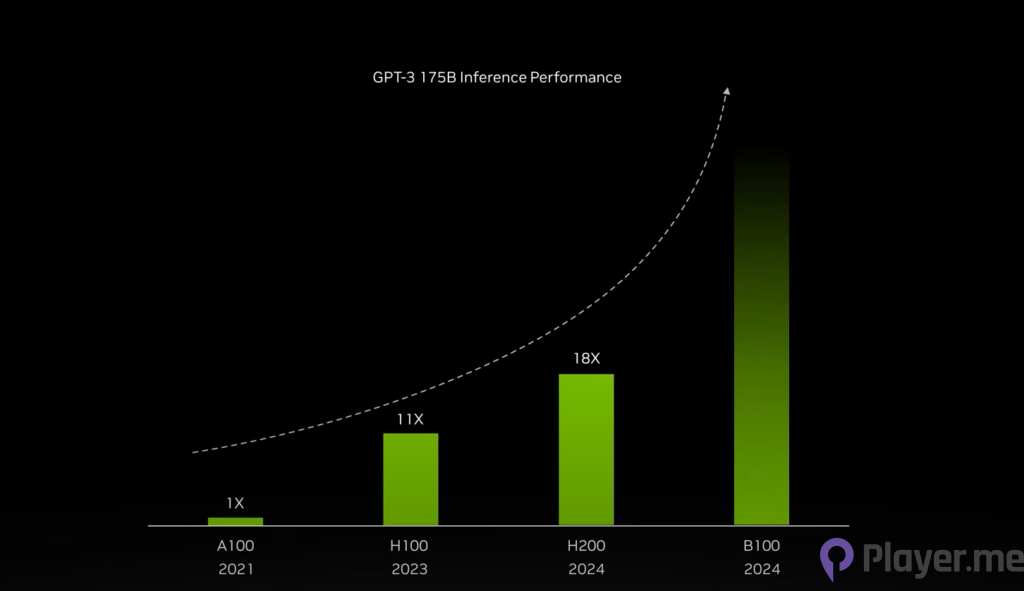

The NVIDIA HGX H200 is an upgrade over its predecessor, the HGX H100, the processor OpenAI used to train its most advanced large language model, GPT-4.

How Is the NVIDIA HGX H200 Better Than the HGX H100?

Apart from its memory, the NVIDIA HGX H200 is largely identical to the HGX H100, but the memory changes amount to a significant improvement. The H200 is the first GPU to use HBM3e, a faster memory specification. The faster memory particularly benefits inference, the stage where a trained model is used to generate text, images, or predictions. Each H200 GPU carries 141 GB of HBM3e, up from the H100’s 80 GB, and its memory bandwidth rises to 4.8 TB per second from the H100’s 3.35 TB per second. An eight-GPU HGX H200 board therefore offers about 1.1 TB of HBM3e memory in total.

Relative to the H100, that is nearly double the capacity and roughly 1.4 times the bandwidth; NVIDIA’s own comparison with the older A100 puts the bandwidth gain at 2.4 times. NVIDIA also projects that the H200 will run inference on the Llama 2 large language model almost twice as fast as the HGX H100.
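The capacity and bandwidth comparisons reduce to simple ratios of the per-GPU figures quoted above. The short Python sketch below recomputes them; the `SPECS` table and `generational_gain` helper are hypothetical names used for illustration, and the numbers are NVIDIA’s announced specifications rather than measured results.

```python
# Per-GPU memory specs as announced by NVIDIA (not independent measurements).
SPECS = {
    "H100": {"capacity_gb": 80, "bandwidth_tb_s": 3.35},
    "H200": {"capacity_gb": 141, "bandwidth_tb_s": 4.8},
}


def generational_gain(old: str, new: str) -> dict[str, float]:
    """Return new/old ratios for memory capacity and memory bandwidth."""
    o, n = SPECS[old], SPECS[new]
    return {
        "capacity_ratio": n["capacity_gb"] / o["capacity_gb"],
        "bandwidth_ratio": n["bandwidth_tb_s"] / o["bandwidth_tb_s"],
    }


if __name__ == "__main__":
    gains = generational_gain("H100", "H200")
    print(f"capacity:  {gains['capacity_ratio']:.2f}x")
    print(f"bandwidth: {gains['bandwidth_ratio']:.2f}x")
```

Running it prints about 1.76x for capacity and 1.43x for bandwidth relative to the H100.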

NVIDIA has highlighted the benefits of the upgraded HBM memory in the HGX H200. In a video presentation released on 14 November, Ian Buck, NVIDIA’s Vice President of High-Performance Computing Products, said: “The inclusion of quicker and larger HBM memory enhances performance in computationally intensive tasks, such as generative AI models and high-performance computing applications. This optimisation leads to increased GPU utilisation and efficiency.”

The Importance of Memory Upgrades in GPU Chips Designed for Generative AI

Buck also stated, “To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory.” In other words, memory upgrades are central to making GPUs better, and those gains feed directly into generative AI performance.

Faster, larger memory lets a GPU handle complex, data-intensive workloads such as generative AI and high-performance computing more efficiently. Higher bandwidth means the GPU spends less time waiting for data and more time computing, which raises utilisation; this matters most when training and running large AI models, where weights and data must be streamed from memory constantly. Greater capacity lets larger models, or more concurrent tasks, fit on a single GPU without being split across several. And as models and software grow more demanding, extra memory headroom helps keep systems useful for longer.
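To make the capacity and bandwidth argument concrete, here is a rough back-of-envelope sketch. It assumes a simplified model in which generating one token at batch size 1 reads every model weight from GPU memory once, so bandwidth caps decode speed and capacity decides whether the model fits on one GPU. The 70-billion-parameter model (the largest Llama 2 size), 16-bit weights, and all function names are illustrative assumptions; the sketch ignores the KV cache, activations, compute limits, and batching.

```python
# Simplified model (an assumption, not NVIDIA's methodology): at batch size 1,
# each generated token reads all weights from GPU memory once.


def weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes); 2 bytes = 16-bit."""
    return params_billion * bytes_per_param


def fits_on_one_gpu(params_billion: float, capacity_gb: float,
                    bytes_per_param: float = 2.0) -> bool:
    """True if the weights alone fit in one GPU (ignores KV cache and activations)."""
    return weights_gb(params_billion, bytes_per_param) <= capacity_gb


def max_tokens_per_second(params_billion: float, bandwidth_tb_s: float,
                          bytes_per_param: float = 2.0) -> float:
    """Bandwidth-bound upper limit on tokens per second at batch size 1."""
    bytes_per_token = weights_gb(params_billion, bytes_per_param) * 1e9
    return bandwidth_tb_s * 1e12 / bytes_per_token


if __name__ == "__main__":
    model_b = 70  # hypothetical 70B-parameter model
    for name, cap_gb, bw_tb_s in [("H100", 80, 3.35), ("H200", 141, 4.8)]:
        if fits_on_one_gpu(model_b, cap_gb):
            bound = max_tokens_per_second(model_b, bw_tb_s)
            print(f"{name}: fits; bandwidth bound ~{bound:.0f} tokens/s")
        else:
            print(f"{name}: weights ({weights_gb(model_b):.0f} GB) exceed "
                  f"{cap_gb} GB; must be split across GPUs")
```

Under these assumptions a 16-bit 70B model only just fits on one H200 (140 of 141 GB, leaving almost no room for the KV cache) and does not fit on one H100 at all. For any model that fits on both, the bound scales with bandwidth, about 1.43x in the H200’s favour. Real throughput also depends on batching, compute, and software, so these figures are ceilings rather than predictions.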

Buck added: “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

The Distribution of NVIDIA HGX H200

Large corporations, start-ups, and government agencies are all competing for a limited supply of these NVIDIA HGX H200 chips.

The firm announced that major cloud providers, including Microsoft Azure, Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure, have already committed to acquiring the new NVIDIA HGX H200. It will be offered in four-way and eight-way configurations that are compatible with both the hardware and software of existing HGX H100 systems.
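As a rough illustration of what the four-way and eight-way options mean for total memory, the sketch below multiplies the 141 GB per-GPU capacity by the GPU count. The function and constant names are hypothetical, and the plain sum ignores any memory reserved by drivers or software.

```python
H200_MEMORY_GB = 141          # per-GPU HBM3e capacity announced by NVIDIA
SUPPORTED_GPU_COUNTS = (4, 8)  # four-way and eight-way HGX H200 configurations


def aggregate_memory_gb(num_gpus: int, per_gpu_gb: int = H200_MEMORY_GB) -> int:
    """Total GPU memory on one HGX H200 board, as a plain sum of per-GPU capacity."""
    if num_gpus not in SUPPORTED_GPU_COUNTS:
        raise ValueError(f"unsupported configuration: {num_gpus}-way")
    return num_gpus * per_gpu_gb


if __name__ == "__main__":
    for n in SUPPORTED_GPU_COUNTS:
        total = aggregate_memory_gb(n)
        print(f"{n}-way HGX H200: {total} GB (~{total / 1000:.1f} TB)")
```

The eight-way total of 1,128 GB is the roughly 1.1 TB figure quoted for the full board.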

In addition, server makers such as ASRock Rack, Asus, Dell, GIGABYTE, Hewlett Packard Enterprise, Lenovo, and many others will be able to upgrade their existing HGX H100 systems with the new NVIDIA HGX H200 chip.

The NVIDIA HGX H200 is expected to ship in the second quarter of 2024, where it will compete with AMD’s MI300X GPU. Like the HGX H200, AMD’s chip offers more memory than its predecessors, allowing large models to fit on the hardware for efficient inference.

How Much Are the NVIDIA HGX H200 Chips?

The NVIDIA HGX H200 chips are expected to carry a significant price tag. NVIDIA has not disclosed specific costs, but according to CNBC, the previous-generation HGX H100s sell for between $25,000 and $40,000 each. Since running AI workloads at the largest scale often requires thousands of these chips, the overall investment can be substantial. NVIDIA spokesperson Kristin Uchiyama noted that pricing is set by NVIDIA’s partners.
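To put “substantial” into numbers, the sketch below multiplies a GPU count by the $25,000 to $40,000 per-chip range CNBC reported for the HGX H100. Because H200 pricing has not been disclosed, using the H100 range is an assumption; the cluster sizes and the `gpu_spend_usd` name are hypothetical, and the total covers the GPUs alone (no servers, networking, or power).

```python
# Per-chip price range CNBC reported for the HGX H100, used here as a stand-in
# because HGX H200 pricing has not been disclosed (an assumption).
PRICE_RANGE_USD = (25_000, 40_000)


def gpu_spend_usd(num_gpus: int,
                  price_range: tuple[int, int] = PRICE_RANGE_USD) -> tuple[int, int]:
    """Low and high estimate of GPU-only spend for a cluster of num_gpus chips."""
    low, high = price_range
    return num_gpus * low, num_gpus * high


if __name__ == "__main__":
    for n in (1_000, 10_000):  # hypothetical cluster sizes
        low, high = gpu_spend_usd(n)
        print(f"{n:,} GPUs: ${low:,} to ${high:,}")
```

By this measure, a thousand chips would cost roughly $25 million to $40 million before any other infrastructure.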

Will the NVIDIA HGX H200 Affect the HGX H100?

NVIDIA’s announcement comes amid intense demand for its HGX H100 chips across the AI industry. The chips are highly regarded for efficiently processing the vast amounts of data needed to train and run generative image tools and large language models. HGX H100s are so scarce and valuable that companies have used them as collateral for loans. Who owns HGX H100s has become a hot topic in Silicon Valley, and some startups have teamed up to share access to the chips.

According to Uchiyama, the NVIDIA HGX H200 will not affect HGX H100 production: NVIDIA plans to keep increasing overall supply throughout the year and is procuring additional supply for the long term. Even with reports that NVIDIA will triple HGX H100 production in 2024, the strength of generative AI demand suggests that demand for these chips will stay high, especially with the more advanced NVIDIA HGX H200 on the way.

Summing Up

Generative AI is advancing quickly, driven by strong demand for innovation, particularly in the GPU chips that power it. Each new GPU launch is quickly followed by competing releases from other companies. Between the expected performance gains of the NVIDIA HGX H200, NVIDIA’s planned chip development, and moves by its competitors, the rapid growth of AI suggests there is much more to come.
